University of Richmond faculty are spearheading a new AI project in hopes of making artificial intelligence more accessible and convenient to all students.

While the use of generative AI in an academic setting has been widely debated, it can have many uses in the classroom. The new UR platform, SpiderAI, allows users to incorporate generative AI into their teaching, research, and learning.

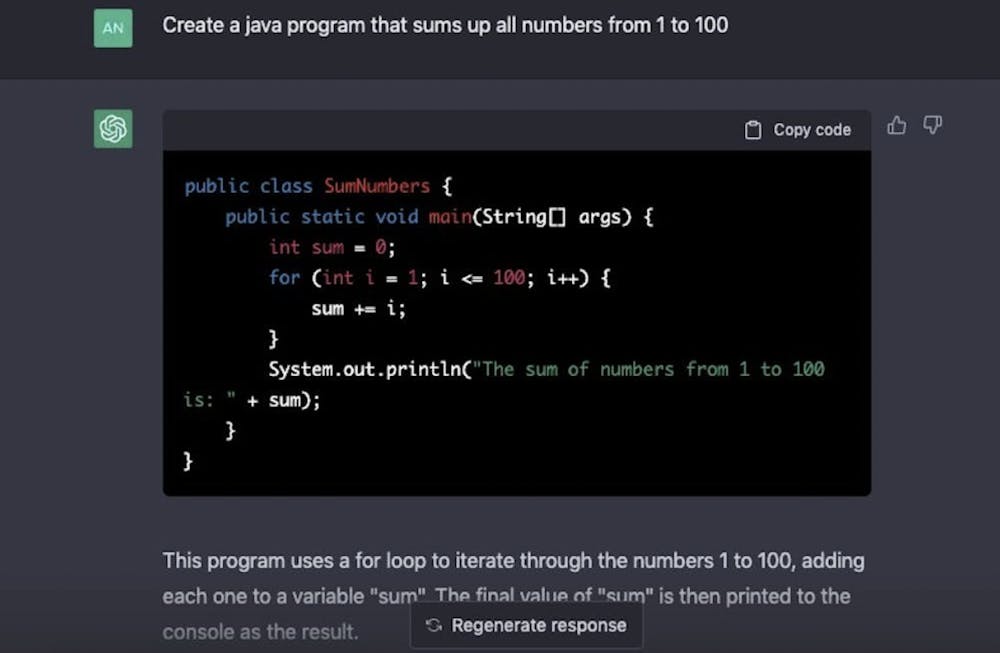

Generative AI, unlike other forms of artificial intelligence, creates new content, whereas traditional AI is limited to analyzing data that already exists. This gives generative AI a wider range of capabilities but also creates greater risks.

SpiderAI was first developed in the 2023-2024 academic year as part of the Faculty Hub’s Faculty Learning Community on Generative AI, and it was released in beta this fall.

“We wanted to give faculty access to high-end AI services like ChatGPT, and we were trying to figure out how to pay for that,” said Andrew Bell, a technology consultant in the Teaching and Scholarship Hub and operations manager for the Faculty Hub.

SpiderAI avoids the paid subscriptions that many AI platforms charge by providing the best versions of these tools to students for free, said Saif Mehkari, a professor of economics at UR.

SpiderAI features functions from a wide array of existing generative AI platforms, Bell said.

“SpiderAI gives instructional staff, faculty, and students who are given access by their professors access to frontier models by OpenAI, so GPT-4o, 4o mini; Anthropic’s Claude Sonnet and Opus; and then Google’s Gemini,” Bell said. “And there’s also some transcription services in there, so you can transcribe speech to text. You can create speech from text, text to speech.”

The range of SpiderAI’s functions is evident in how it has been used in the classroom.

“I had a student the other day who was struggling with algebra and used ChatGPT to both create questions and answers to algebra questions to practice for the class,” Mehkari said. “We've seen students use it to create images and videos for presentations. So, there are lots and lots of different uses. We've heard that some people are using it in their second language classes to understand translations.”

SpiderAI offers seemingly endless possibilities, which inevitably raises the question of whether students could misuse it to avoid doing the actual work. However, SpiderAI was envisioned differently, Bell said.

“We want to think about these tools as ways to enhance learning, not to create tools for our students to circumvent the things that we are asking them to do,” he said.


While tools like ChatGPT have become associated with misuse, SpiderAI was built with strong safeguards. SpiderAI was originally accessible only to faculty. That has since changed, but students can be granted access only by an instructor. Once an instructor grants them access, students can create a SpiderAI account, which gives them open access and 30,000 credits. Asking the most basic model on SpiderAI to generate sample algebra questions costs less than one credit, while asking it to transcribe a four-minute conversation costs around 30 credits.

Only a faculty member can issue the invite codes to students that give them access to SpiderAI, Bell said.

Students can interact with chats made by their professors, but they can’t create custom chats. They are limited to the functions already available on SpiderAI, such as ChatGPT and Google Gemini, and cannot add new ones.

There are also other barriers built into the system to make sure that students use SpiderAI appropriately. Searches on SpiderAI are anonymous, but safety measures are still in place.

Senior Hung Pham works in the Teaching and Learning Center and the Quantitative Research Center, where he has used and helped others to use SpiderAI.

“If there is anything that is super dangerous that people look up, it's flagged,” Pham said. “If something gets flagged, then it can be tracked.”

Allowing greater access to generative AI comes with some inherent risks. Still, from Silicon Valley to Capitol Hill, generative AI has become standard across many fields, and its uses extend far beyond the classroom, Bell said.

“We’re committed to helping students figure out what the future looks like, right? And I think that one of the things we hear from students is that they want to be prepared for a workforce that expects a certain level of AI literacy,” Bell said.

With these precautions in place, SpiderAI will continue to expand, both in its functions and in how widely it is used. The role of generative AI will only grow over the long term, Mehkari said.

“I think the content of what you teach is going to change, and the administrative tasks that we do are going to change. There are prototypes out there of auto-replying to emails and prototypes out there of academic support software that are AI-based. I think we are slowly getting there. We’re not there yet in many areas, but we will get there,” Mehkari said.

Contact writer Jeremy Young at jeremy.young@richmond.edu
